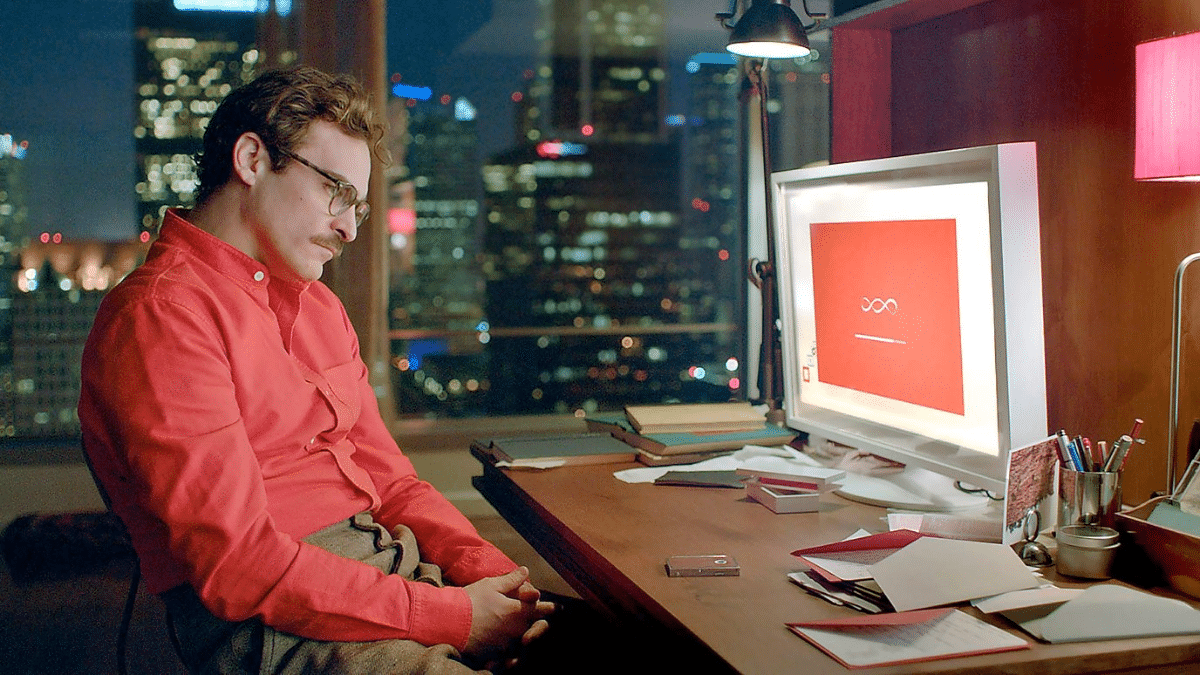

Five Things the Movie Her Got Wrong, and a Bit Right

by Michael Khoo, climate disinformation program director

Originally posted on Tech Policy Press

OpenAI CEO Sam Altman tweeted the movie title Her earlier this month in advance of the launch of his company’s GPT-4o chatbot. One of the voices available for the audio version of the conversational system was Sky, a chatbot that sounded a lot like Scarlett Johansson, who provided the voice for the AI character in the award-winning film. Noting that the voice “sounded so eerily similar” to her own, Johansson took swift legal action because, she revealed, Altman had asked to use her voice in 2023, and she said no. OpenAI contests the claim that it created a voice to sound like Johansson’s, but regardless, the demos featured advances in the technology’s ability to mimic human conversation and flirt with users.

Amidst the fracas, I rewatched the 2013 film to understand how prescient it might have been in light of today’s advances in AI “assistants” and “agents.” While the first half of the movie comes frighteningly close to depicting our current reality, the latter half was deeply off, in ways that speak to our current illogic on regulating AI and our misunderstanding of the way AI’s power is already being used and abused, and its broader costs to society and the planet.

First, Her got a lot right, especially on the behavior side. Personal AI chatbots are already a big business and are quickly being integrated into society. Replika, a company that builds personalized chatbots, reportedly has 25+ million users. The sector’s growth is so strong that Altman appears to have relaxed his posture on bots that form parasocial or even romantic relationships; last November, he told the podcast Hard Fork that such products were not on OpenAI’s roadmap. Some even propose AI chatbots as a potential solution to the epidemic of loneliness, so we’re already well past this Rubicon, as the movie predicted.

Sex is also at the core of AI’s growth, in the movie and in real life. Sex has driven technology development from the days of VHS vs. Betamax to Napster and Pornhub, so it’s important not to understate why a seeming approximation of Johansson’s voice would be used in this way. Theodore, Joaquin Phoenix’s character in Her, acquires the AI named “Samantha” with the initial idea that it’s just a helpful chatbot to reorganize his email. But Theodore is not-so-subtly teased with the prospect that he might also get to discuss more lascivious subjects with this attractive female voice, and does so repeatedly. This is a classic cisgender male fantasy (not shocking, as it comes from male-dominated Silicon Valley), and sex is the juice that’s now making AI chatbots and AI image generation go viral. One need only ask Taylor Swift if sex is driving AI’s use and abuse.

On a deeper level, the movie gets the fundamentals and consequences of current AI development and policies entirely wrong in four ways.

First is the absence of user control. The chatbot in Her is independent of Theodore, so when he turns her sound off, she is still functioning independently. As it turns out, this includes having other relationships and then organizing against him. Theodore later finds out that she is having 8,316 other conversations while talking to him, and 600+ actual relationships. In Her, Theodore remarks with some excitement how lucky he is that his bot is interested in him, as it’s “very rare.” This is not the promise of current AI chatbots, where companies state repeatedly that users will have both privacy and control over their data. Experienced observers may not have faith this is or will always be the case, but today’s iterations of AI chatbots are at least designed to be subservient, just as Microsoft Excel is. And this subservience in an on-demand voice feeds the opportunity for users to experience a kind of sexual domination. AI systems might break this one day, but the current designs are for control, however potentially flawed, not for some independent new personality where users have to earn their bots’ attention.

The second divergence is efficiency and environmental costs. Samantha’s ability to have thousands of conversations also raises the question: why only 8,316? Is it a lack of “compute” power, a current constraining factor for the industry as it faces the environmental and energy costs of its needs? The many land, water, and energy use problems we are now recognizing as a result of AI’s growth are absent in Her.

The third and most important divergence is the lack of any significant depiction of the role of money and the corporate interests controlling AI development. No one in Her is seen paying a subscription fee, so there is no concern about a company taking the service away. Theodore panics when Samantha goes offline briefly, showing this problem. But in today’s version of AI, companies are charging money and aiming to charge much more in the future. What will happen when a Fortune 500 company embeds its core functions around a chatbot and then OpenAI or Google decides one day to charge 1,000% more for that service and threatens to turn it off? You can imagine Theodore’s panic transformed into the sweat of 500 CEOs.

We already see that AI is being used to make money by siphoning traffic and authority from media institutions with an injection of spam, spoofs and hijacking. There is no company like OpenAI listed in the movie, nor any wide-eyed CEO depicted making nefarious decisions about the product and its use. Yet today, we are surrounded by companies making these decisions. OpenAI, Google, and others are rapidly trying to outdo each other while making promises about “safety” that they just as quickly throw out the window, trampling the rights of women, minorities, artists, and journalists along the way.

Last, there was no discussion of “P(doom).” Today, there is a fairly wide civil society discussion about whether AI will harm society or even end humanity. In the film, the AI “beings” all band together at the end, and then just take off in a “Kumbaya” moment, heading away to some kind of machine nirvana. It’s not said where they go, but this development is not depicted as dangerous to humans. Today the scenario that a “sentient” AI or AGI just buries itself in a server somewhere and is never seen again seems deeply unlikely. More likely, AI companies would do something in their self-interest, or something destructive. There are no structural reasons why AI beings would just go dormant; if nothing else, they would still have to secure the energy supply they need to stay “alive.”

Her is an amazing story that’s likely to hit home right now as a cautionary tale where humanity gets abandoned in the end. But in the absence of serious policy change, it seems much more likely that our real-life story will have different conclusions, where people’s rights to their identities are deliberately and non-consensually used—and then pimped out for cash. A reality where billionaire CEOs can skirt the law and not be held to account.

Scholars and advocates have many basic and powerful policy prescriptions to change the course of this technology’s development so that it benefits the majority, not the powerful few. These include comprehensive laws to address privacy; safety standards to prevent the spread of disinformation, discrimination, and nonconsensual pornographic images; transparency requirements; and schemes to compensate the creators whose data is used without permission to create AI systems.

If powerful women like Scarlett Johansson and Taylor Swift can be violated by this technology and have little recourse, it sets a grim precedent. And the irony is that AI proponents like Altman say they love Her’s halcyon vision of the future, while they actively push the technology in the opposite direction.

In Her, we meet a lucky end where AI just moves on as though nothing ever happened. In our world, you can rest assured personal AIs will never disappear, not least because they’re monetizing us through monthly subscriptions. And if they do leave, one can imagine it will only be for the most dangerous of reasons.